Chapter - Generative Adversarial Networks (GANs)


Copyright and License

All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, without written permission of the copyright owner.

MIT License.


Generative Adversarial Networks

In this section of the book, I will cover Generative Adversarial Networks (GANs). GANs (Goodfellow et al. 2014) are an approach to building generative models, that is, models that learn to produce new samples resembling their training data. GANs are formulated as a two-player adversarial game. One of the biggest challenges in supervised learning is annotating the data: annotation usually cannot be done automatically, and without annotations we cannot train our models. But what if we could replace the annotation of the data with something else? For instance, what if we could model the annotation task as a game, or use other prior knowledge about the world as labels? These ideas are among the main motivations for GANs.

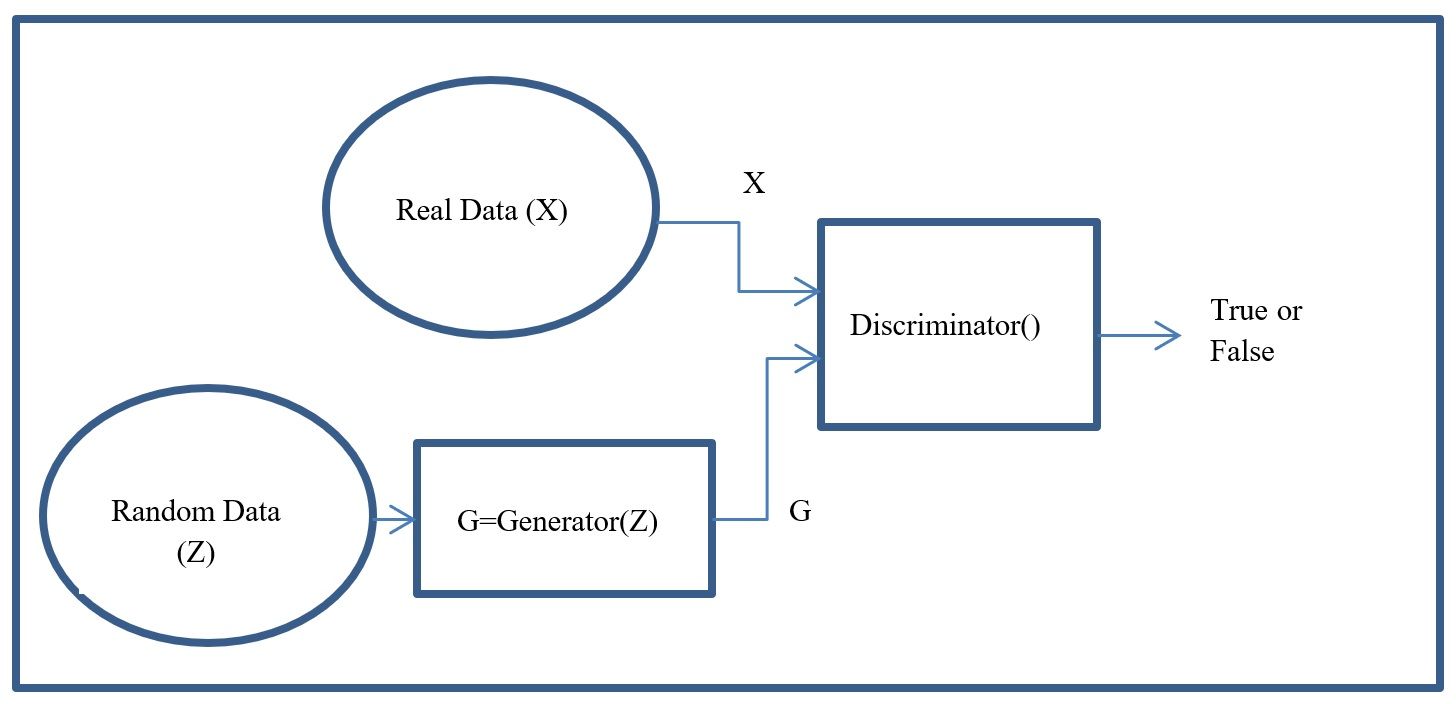

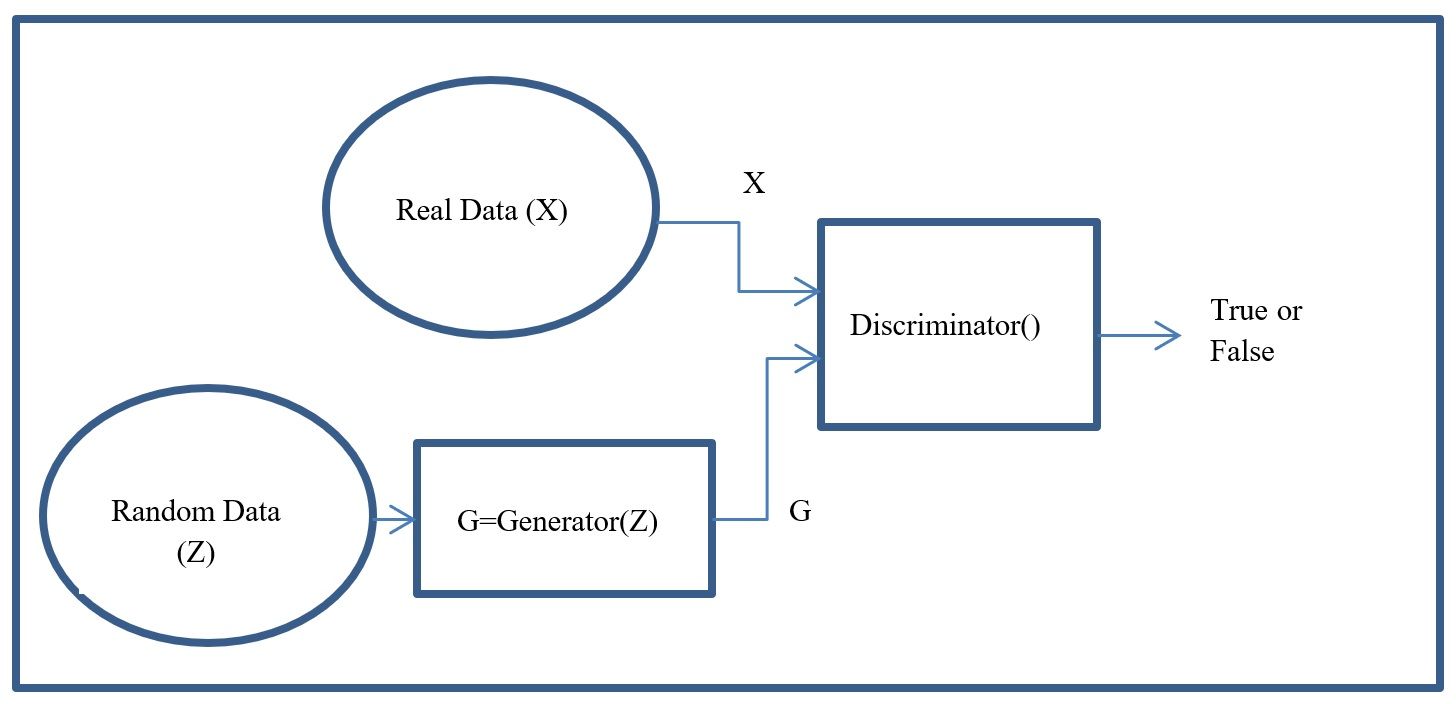

GANs consist of two deep neural networks: a generator network connected to a discriminator network. The discriminator sees the real training data, while the generator receives only random noise as input. A GAN is essentially a two-player game in which one player (the generator) creates synthetic data samples and the second player (the discriminator) takes a sample and classifies it.

This classification determines whether the sample looks like it was drawn from the same distribution as the discriminator's training data. Because the two networks are connected, the GAN can learn to generate better synthetic samples from the discriminator's feedback: the gradient of the discriminator's output is backpropagated through the discriminator into the generator, which tells the generator how to adjust its weights so that its samples are more likely to be classified as real.
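To make this feedback loop concrete, here is a minimal sketch in standard-library Python; it is not the chapter's MNIST code. It assumes a deliberately tiny, hypothetical setup: real data drawn from a 1-D Gaussian with mean 1.0 and standard deviation 0.5, a generator G(z) = a*z + b, and a logistic discriminator D(x) = sigmoid(w*x + c), all trained with hand-derived gradients. The discriminator step raises D on real samples and lowers it on generated ones; the generator step then moves its samples in the direction given by the discriminator's slope w. The generator minimizes -log D(G(z)) (the "non-saturating" variant suggested by Goodfellow et al.) rather than log(1 - D(G(z))).

```python
import math
import random


def sigmoid(u):
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(real_mean=1.0, real_std=0.5, steps=4000, batch=64, lr=0.05, seed=0):
    """Alternate discriminator and generator gradient steps on a hypothetical 1-D problem."""
    rng = random.Random(seed)
    a, b = 1.0, -1.0  # generator G(z) = a*z + b with z ~ N(0, 1)
    w, c = 0.0, 0.0   # discriminator D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_w = (sum((d - 1.0) * x for d, x in zip(d_real, real))
                  + sum(d * x for d, x in zip(d_fake, fake))) / batch
        grad_c = (sum(d - 1.0 for d in d_real) + sum(d_fake)) / batch
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimize -log D(G(z)); the gradient reaches a and b through D's slope w.
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        d_gen = [sigmoid(w * (a * z + b) + c) for z in zs]
        grad_a = sum((d - 1.0) * w * z for d, z in zip(d_gen, zs)) / batch
        grad_b = sum((d - 1.0) * w for d in d_gen) / batch
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b, w, c


a, b, w, c = train_toy_gan()
print(f"generator offset b = {b:.3f} (real mean is 1.0)")
```

After training, the offset b typically ends close to the real mean, and w ends close to zero because the discriminator can no longer tell the two sample sets apart. Since this discriminator is linear in x, it can only compare means, so the sketch makes no attempt to match the spread of the data; matching full distributions such as MNIST images requires far more expressive networks on both sides.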

In other words, a GAN uses two deep neural networks that interact with each other to generate data, and its formulation follows the framework of a two-player adversarial game. One network, the generator, tries to learn the data distribution and produce new samples similar to those in the real data. The other network, the discriminator, is a classifier that tries to determine whether a given sample is real or was produced by the generator. By competing with each other, the two networks push the generator toward producing increasingly realistic samples.

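The generator in a GAN is closely related to the decoder half of an autoencoder: both map a small latent vector to a full-size sample. Therefore, before looking at GANs in more detail, we will look at the autoencoder.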

Autoencoders

Autoencoders are a type of compression method in which a neural network learns to represent a vector of size m as a vector of size n, where n is much smaller than m (m >> n). The input to the network is the original sample, the output is the reconstructed sample, and the narrow hidden layer in the middle (the bottleneck) holds the new compressed representation of the input vector. The objective function minimizes the difference (distance) between the original input sample and the reconstructed output sample, for example the squared error between the two.

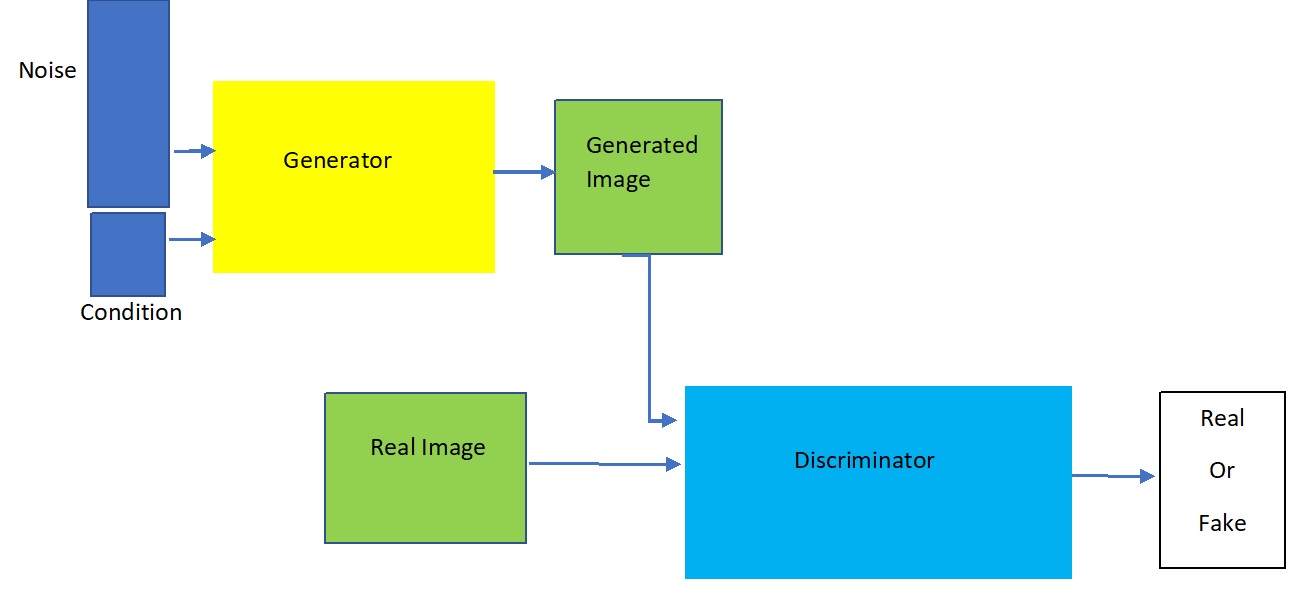
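A minimal sketch of this objective, assuming a purely linear autoencoder (no activation functions) trained with plain stochastic gradient descent in standard-library Python. The encoder matrix maps a size-m input to a size-n code, the decoder maps the code back to size m, and training minimizes the squared distance between input and reconstruction. The function names and the toy data (3-D points that all lie on one line, so a 1-D code can reconstruct them exactly) are hypothetical choices for illustration.

```python
import random


def matvec(W, v):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in W]


def train_linear_autoencoder(data, m, n, lr=0.01, epochs=200, seed=0):
    """Train an m -> n -> m linear autoencoder with SGD on the squared reconstruction error."""
    rng = random.Random(seed)
    W_enc = [[rng.uniform(-0.5, 0.5) for _ in range(m)] for _ in range(n)]  # n x m
    W_dec = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(m)]  # m x n
    history = []  # mean reconstruction error per epoch
    for _ in range(epochs):
        total = 0.0
        for x in data:
            h = matvec(W_enc, x)       # compressed code of size n
            x_hat = matvec(W_dec, h)   # reconstruction of size m
            err = [xh - xi for xh, xi in zip(x_hat, x)]
            total += sum(e * e for e in err)
            g_out = [2.0 * e for e in err]  # gradient of the loss w.r.t. x_hat
            # Backpropagate to the code before the decoder weights change.
            g_h = [sum(W_dec[i][j] * g_out[i] for i in range(m)) for j in range(n)]
            for i in range(m):
                for j in range(n):
                    W_dec[i][j] -= lr * g_out[i] * h[j]
            for j in range(n):
                for k in range(m):
                    W_enc[j][k] -= lr * g_h[j] * x[k]
        history.append(total / len(data))
    return W_enc, W_dec, history


# Hypothetical data: 3-D points on a single line, so a 1-D code suffices.
rng = random.Random(1)
direction = [1.0, 2.0, -1.0]
data = [[t * d for d in direction] for t in (rng.uniform(-1.0, 1.0) for _ in range(50))]
W_enc, W_dec, history = train_linear_autoencoder(data, m=3, n=1)
print(f"epoch 1 error: {history[0]:.4f}, final error: {history[-1]:.6f}")
```

Practical autoencoders add nonlinear activations and more layers, but the training signal is the same reconstruction error. The decoder half, which maps a short code back to a full-size vector, has the same input/output shape as a GAN generator.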

Original GAN

The original GAN consists of a generator and a discriminator and was proposed in a 2014 paper by Ian Goodfellow and colleagues (Goodfellow et al. 2014). The general architecture of the GAN can be seen in the figure below.

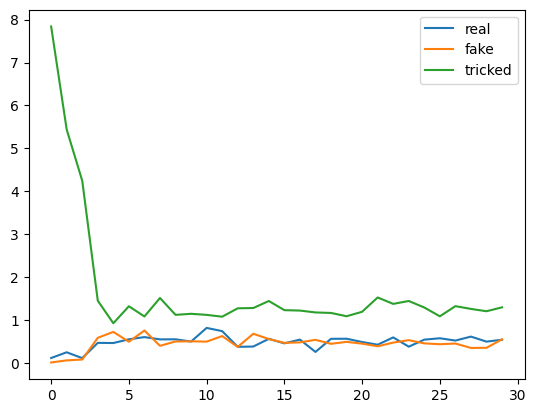

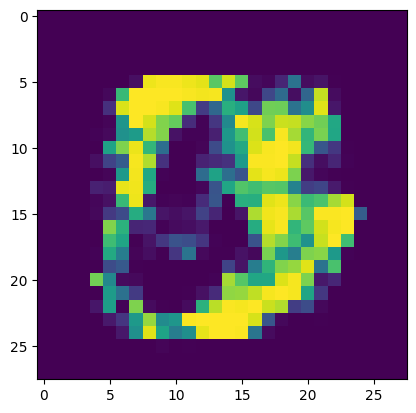

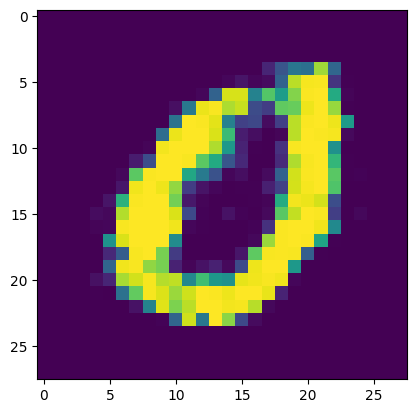

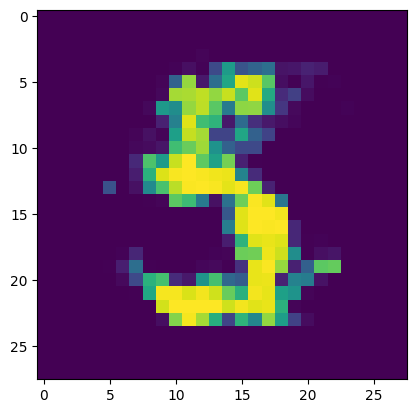
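In the notation of Goodfellow et al. (2014), with D(x) the discriminator's estimated probability that x is real, G(z) the generator's output for a noise vector z, p_data the data distribution, and p_z the noise prior, the two-player game is the minimax problem:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator maximizes V by assigning high probability to real samples and low probability to generated ones, while the generator minimizes it. Because log(1 - D(G(z))) gives weak gradients early in training, when the discriminator easily rejects generated samples, the same paper suggests training G to maximize log D(G(z)) instead.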

Generating MNIST digits with GANs

In this section, I will describe how to implement a GAN that can generate images. The algorithm will work with the MNIST data set. As always, the code can be downloaded from my GitHub.

First we import the libraries.

We load the MNIST data in a similar way to what we did in previous chapters

and the GAN training function is

Conditional GANs

The conditional GAN (CGAN) does more than sample random images from a learned distribution: both networks also receive extra information, such as a class label. In the case of MNIST, for example, it can generate an image of a specific digit given that digit's label. The architecture for the CGAN can be seen below.
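One common way to implement this conditioning, sketched here under assumed sizes, is to concatenate a one-hot encoding of the label to the generator's noise vector and to the discriminator's input. The latent size of 100 and the helper names are illustrative assumptions, and the image is a zero placeholder standing in for a flattened 28x28 generator output.

```python
import random

NUM_CLASSES = 10   # MNIST digits 0-9
LATENT_DIM = 100   # size of the noise vector z (an assumed value)


def one_hot(label, num_classes=NUM_CLASSES):
    """Encode an integer class label as a one-hot list."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def generator_input(z, label):
    """Hypothetical CGAN generator input: noise vector followed by the one-hot label."""
    return z + one_hot(label)


def discriminator_input(image, label):
    """Hypothetical CGAN discriminator input: flattened image followed by the same one-hot label."""
    return image + one_hot(label)


rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
g_in = generator_input(z, label=7)
print(len(g_in))  # 110

fake_image = [0.0] * (28 * 28)  # placeholder for the generator's flattened output
d_in = discriminator_input(fake_image, label=7)
print(len(d_in))  # 794
```

Because the discriminator sees the label too, it can reject an image that looks like a realistic digit but does not match the requested class, which forces the generator to respect the label.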

Summary

In this chapter, I described Generative Adversarial Networks, walked through some sample code, and discussed some applications of GANs.